Legislation against disinformation: The role of the Digital Services Act in the EU and prospects for Ukraine

The reach of Russian propaganda even increased during the first half of 2023 because X (formerly Twitter) changed its safety standards.

Although big tech platforms committed to fighting Russian propaganda in early 2022, they proved largely ineffective last year. The European Parliament elections in June 2024 will be a target of Russian information warfare. In response, Brussels has developed new policies and legislation (the Digital Services Act and the Digital Markets Act) to counter the threats that Russian interference poses to the information space. The Centre’s team set out to study Europe’s plans to protect its information space and to identify what Ukraine could adapt to its own circumstances.

In June 2022, at the request of the European Commission, 34 signatories, including major digital platforms, signed the strengthened Code of Practice on Disinformation, designed to work alongside the Digital Services Act. The Code, first introduced in 2018, was the first instance in which industry players agreed to self-regulatory standards to combat disinformation. The 2022 version contains 44 commitments and 128 specific measures in the following areas:

● Control over ad placement (including reducing financial incentives for disinformation distributors);

● Ensuring transparency of political ads;

● Integrity of services;

● Empowering users;

● Providing wider access to data for researchers;

● Enhancing cooperation with fact-checking communities.

In practice, however, it soon became clear that the platforms had not implemented these measures effectively enough to counter disinformation. Twitter (now X) went further and unilaterally withdrew from the Code in May 2023.

Shortly after the Code of Practice on Disinformation was signed, the EU adopted the Digital Services Act, which entered into force in November 2022; its obligations for the largest platforms have applied since August 2023. In essence, the act aims to create a safer online environment and requires online platforms to provide users with the tools to achieve it.

Platforms covered by the Digital Services Act

The EU defines very large online platforms and very large online search engines as services with more than 45 million monthly active users in the EU. Currently, 19 services have been designated: Alibaba AliExpress, Amazon Store, Apple App Store, Booking.com, Facebook, Google Play, Google Maps, Google Shopping, Instagram, LinkedIn, Pinterest, Snapchat, TikTok, Twitter, Wikipedia, YouTube, Zalando, Bing, and Google Search.

In response to the new law, Google expanded its Transparency Center in August. Meta introduced additional transparency tools and promised to expand the customization options in its advertising tools. TikTok changed its advertising policy and made its recommendation algorithm optional, so that users can choose between a non-personalized feed and one tailored to their preferences.

From February 2024, the Digital Services Act will apply to all online platforms, regardless of their size.

What happens if these platforms don’t follow the rules?

Online platforms that fail to comply with the Digital Services Act can be fined up to 6% of their annual worldwide turnover. According to the European Commission, the Digital Services Coordinators and the Commission will have the power to “demand immediate action if necessary to remedy very serious harm.” As a last resort, a platform that persistently refuses to comply with the law may have its service temporarily suspended in the EU. However, some observers doubt that the new law will have a large-scale effect on the largest online platforms.

How can the Digital Services Act protect against the Kremlin’s disinformation campaigns?

In the EU, the reach of Moscow-sponsored disinformation has grown since February 2022. According to an August report by the European Commission, Russian disinformation reaches its largest audience on Meta platforms, while the number of Kremlin-backed accounts on Telegram has tripled. The rules introduced by the Digital Services Act have great potential to counter Kremlin disinformation, but they must be applied quickly and effectively.

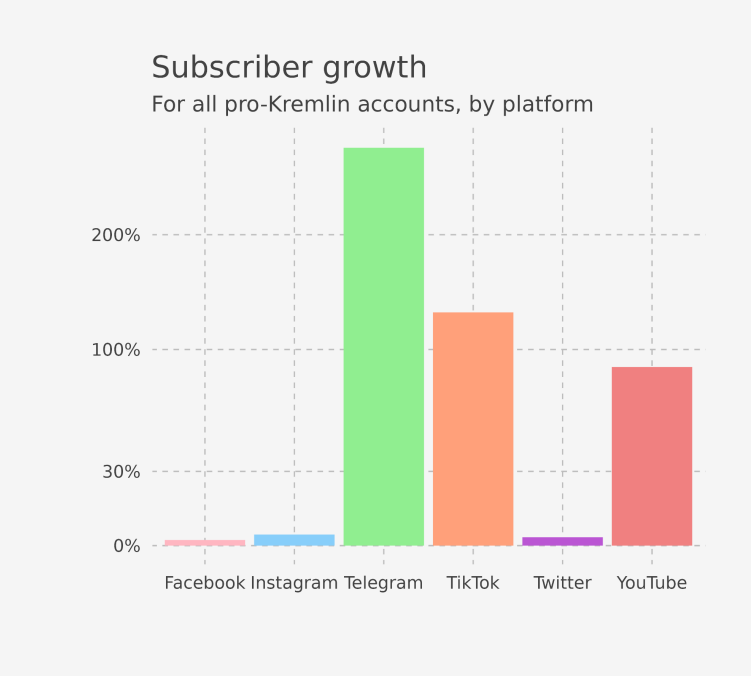

Subscriber growth across all pro-Kremlin accounts by platform, February to December 2022

(Source: Digital Services Act: Application of the risk management framework to Russian disinformation campaigns)

Although tech companies have long used various risk assessment techniques in the areas of human rights, trust, and safety, the risk assessment obligations set out in the Digital Services Act currently apply only to platforms and search engines with more than 45 million users in the EU. These are, in fact, the first regulatory requirements to assess the risks associated with online content and online behaviour.

Such requirements will not be the last, however, as other countries are introducing similar rules. From Singapore’s Online Safety Act, passed last year, to the UK’s long-awaited Online Safety Act, risk assessment is becoming a standard feature of many content regulation regimes. From Brazil to Taiwan, legislative proposals have adopted the concept of “systemic risk” introduced by the Digital Services Act.

The Digital Services Act defines four categories of systemic risk:

● Illegal content;

● Negative impact on the exercise of fundamental rights;

● Negative impact on public security and electoral/democratic processes;

● Negative impact on public health protection, with a specific focus on minors, physical and mental well-being, and gender-based violence.

Illegal content

Article 34 of the EU Digital Services Act lists the dissemination of illegal content as a systemic risk. The Act’s recitals also specify:

“Relevant national authorities should be able to issue such orders against content considered illegal or orders to provide information on the basis of Union law or national law in compliance with Union law, in particular the Charter, and to address them to providers of intermediary services, including those established in another Member State.”

The Kremlin’s disinformation campaign during the war in Ukraine significantly increases the risk of illegal content appearing on online platforms, including a growing number of online publications that support and justify the aggression. The European Commission’s August report shows that Kremlin disinformation contributes to the spread of illegal content, including calls for violence and other violations.

_______________________________________________________________________

The share of toxic and potentially illegal comments on posts from pro-Kremlin accounts skyrocketed between February and April 2022. The researchers used the Perspective API, a tool that scores how likely a comment is to be perceived as offensive, to analyze comments on pro-Kremlin accounts before and after the full-scale invasion. According to the report, toxic comments increased by 120% on Twitter and by 70% on YouTube.

_______________________________________________________________________
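The kind of before/after comparison described in the box above can be illustrated with a short sketch. This is not the report’s method, which is not described in detail: it assumes each comment has already received a toxicity score between 0 and 1 (the range the Perspective API returns for its TOXICITY attribute), treats a hypothetical cut-off of 0.7 as “toxic”, and uses invented sample scores rather than any data from the report.

```python
from typing import Sequence

# Hypothetical cut-off: the report does not state which threshold it used.
TOXICITY_THRESHOLD = 0.7


def toxic_share(scores: Sequence[float], threshold: float = TOXICITY_THRESHOLD) -> float:
    """Return the fraction of comments whose toxicity score meets or exceeds the threshold."""
    if not scores:
        raise ValueError("no scores supplied")
    return sum(1 for s in scores if s >= threshold) / len(scores)


def relative_change(before: float, after: float) -> float:
    """Return the percentage change from `before` to `after`."""
    if before == 0:
        raise ValueError("baseline share is zero")
    return (after - before) / before * 100


# Invented scores for illustration only (not data from the report).
before_scores = [0.1, 0.8, 0.2, 0.3, 0.05, 0.9, 0.4, 0.1, 0.2, 0.3]
after_scores = [0.75, 0.8, 0.2, 0.9, 0.05, 0.95, 0.4, 0.72, 0.2, 0.3]

if __name__ == "__main__":
    share_before = toxic_share(before_scores)
    share_after = toxic_share(after_scores)
    change = relative_change(share_before, share_after)
    print(f"before: {share_before:.0%}, after: {share_after:.0%}, change: {change:+.0f}%")
```

Measuring the share of toxic comments, rather than their raw count, keeps the comparison meaningful even if overall comment volume changed after the invasion; whether the report normalized its figures this way is an assumption.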

Under which legislation is the illegality of content determined?

At first glance, the distribution of illegal content seems an obvious category of systemic risk, since companies have been dealing with illegal content under European law for many years.

However, it is important to establish under which legislation content is deemed illegal and should therefore be covered by the Digital Services Act risk assessment. Does this apply only to content that is illegal in all European countries, such as terrorist content or copyright infringement? What measures should be taken when jurisdictions define and interpret illegal content differently?

These questions still need to be answered.

Negative impact on the exercise of fundamental rights

The Kremlin’s disinformation campaign poses significant threats to basic human rights by disseminating a wide range of discriminatory content that degrades and dehumanizes certain groups or individuals based on their nationality, sex, gender, or religion.

For example, the Detector Media team analyzed 45,000 posts in the Ukrainian and Russian segments of Facebook, Instagram, Twitter, YouTube, and Telegram published between February 28 and August 28, 2022. They found that the propaganda’s main goal was to identify divisions in Ukrainian society and deepen them. Moreover, the exploitation of gender and sexuality is one of the central elements of the “Russian world” concept.

Negative impact on public security and electoral/democratic processes

The Kremlin’s disinformation campaign carries a significant risk of negative consequences not only for public security but also for electoral and democratic processes. Under international human rights law, both types of risk can arise when deceptive methods are used to mislead citizens and prevent them from fully exercising their rights, such as the right to vote and the rights to freedom of expression, freedom of information, and freedom of opinion.

In its General Comment No. 25 on Article 25 of the International Covenant on Civil and Political Rights, the UN Human Rights Committee notes that “Voters should be able to form opinions independently, free of violence or threat of violence, compulsion, inducement or manipulative interference of any kind.” In her Report on Disinformation and Freedom of Opinion and Expression during Armed Conflicts, the UN Special Rapporteur on freedom of opinion and expression emphasizes that “access to diverse, verifiable sources of information is a fundamental human right” and “an essential necessity for people in conflict-affected societies.” She also points out that any manipulation of the thinking process, such as indoctrination or “brainwashing” by State or non-State actors, violates freedom of opinion. Content curation through powerful platform recommendation systems or microtargeting, which plays an important role in amplifying disinformation and political tensions, constitutes unauthorized interference with users’ internal thinking processes in the digital environment. It therefore violates the right to freedom of opinion.

The Kremlin’s disinformation campaign employs a variety of behaviours and content types on online platforms that mislead users about vital public issues in wartime and artificially amplify the reach of Kremlin disinformation through numerous unverified sources. Failure to curb this campaign could pose significant risks to electoral and democratic processes. The overview above outlines the types of behaviour and content used by pro-Kremlin accounts that create potential systemic risks to both electoral and democratic processes.

Which legislative tools does Ukraine have to regulate the digital environment?

Ukraine may feel ripple effects, since harmonizing our legislation with the EU legal system is one of the conditions for European integration. Thanks to the Ministry of Digital Transformation of Ukraine, we now have the laws On Cloud Services and On Stimulating the Development of the Digital Economy in Ukraine, and the relevant committee is working on a draft law on personal data protection.

To counteract disinformation, Ukraine has legislation that provides for civil liability, namely:

● Article 32 of the Constitution of Ukraine: “Everyone is guaranteed judicial protection of the right to rectify incorrect information about himself or herself and members of his or her family, and of the right to demand that any type of information be expunged, and also the right to compensation for material and moral damages inflicted by the collection, storage, use and dissemination of such incorrect information.”

● The Law On Information and Article 278 of the Civil Code of Ukraine, which seek to balance freedom of speech against the exercise of that freedom to the detriment of the legitimate interests, rights, and freedoms of individuals and legal entities.

Administrative liability is provided for by the Law On Television and Radio Broadcasting and the Law On Print Media (Press) in Ukraine. The Criminal Code of Ukraine provides for criminal liability. For example, Article 109 establishes liability for public calls to overthrow the constitutional order, including when committed by organized groups (such as “bot farms”). However, none of these acts establishes the liability of very large online platforms and search engines for disseminating disinformation.

In August 2023, the Verkhovna Rada adopted the “European integration” law On Digital Content and Digital Services, which introduced the terms “digital item,” “digital content,” and “digital service.” The law aims to protect consumer rights when purchasing and using digital content or services. Accordingly, it regulates the supply to consumers of:

● digital content on a physical data carrier;

● software and applications, including their development in accordance with the requirements of the consumer;

● software as a service;

● media content in electronic format;

● streaming services;

● digital services and content on social networks and in the cloud;

● online games;

● file hosting services, etc.

However, unlike the EU Digital Services Act, this law contains no provisions dedicated to human rights. This opens a window of opportunity for Ukraine to define the legal relationship between Big Tech and users in the context of information warfare: great opportunities bring great responsibility for seizing them.

Center for Strategic Communication and Information Security